I have deployed countless RKE2 clusters on AWS GovCloud and have the process down to about 10 minutes for an HA cluster in our production environments. Azure is a different can of worms. The root cause of the difficulty is still a little foggy to me, but after copious amounts of rage I was able to get a cluster up on Azure Government, and the following is my story.

Pre-requisite Knowledge

You will need a foundational understanding of the following:

- Terraform

- Terragrunt

- Linux shell scripting (bash)

- Cloud things (in this case Azure resources)

WARNING: This module is still a work in progress and we are actively collecting feedback. It is not recommended for production workloads at this time.

rke2 is lightweight, easy to use, and has minimal dependencies. As such, there is a tremendous amount of flexibility for deployments that can be tailored to best suit you and your organization's needs.

This repository is intended to clearly demonstrate one method of deploying rke2 in a highly available, resilient, scalable, and simple method on Azure Government. It is by no means the only supported solution for running rke2 on Azure.

We highly recommend you use the modules in this repository as stepping stones in solutions that meet the needs of your workflow and organization. If you have suggestions or areas of improvement, we would love to hear them!

You need to be root.

Everything you will be doing requires modifying files that need sudo access, so to make all of this easier, start by becoming root:

sudo su

To get this module to work you must select one server and rerun the 01_rke2.sh script in /var/lib/cloud/instances/$INSTANCE/scripts/ on it, then do the same on each subsequent server node to get them to join the cluster.

The agents module, however, works just fine in joining the cluster once a master is present.

Example Terragrunt

locals {

name = "rke2-vault"

tags = {

"Environment" = local.name,

"Terraform" = "true",

}

}

# Terragrunt will copy the Terraform configurations specified by the source parameter, along with any files in the

# working directory, into a temporary folder, and execute your Terraform commands in that folder.

terraform {

source = "git::github.com/rancherfederal/rke2-azure-tf.git"

}

# Include all settings from the root terragrunt.hcl file

include {

path = find_in_parent_folders()

}

generate "provider" {

path = "provider.tf"

if_exists = "overwrite"

contents = <<EOF

provider "azurerm" {

version = "~>2.16.0"

environment = "usgovernment"

features{}

}

terraform {

backend "azurerm" {}

}

EOF

}

# path to a pre-existing Azure VNET terragrunt directory

dependency "network" {

config_path = "../../../network/vault_vnet"

mock_outputs = {

vnet_id = "/some/long/stupid/id",

vnet_location = "location",

vnet_name = "crazy",

vnet_subnets = ["id_1", "id_2"],

vnet_address_space = ["10.0.0.0/12"]

}

}

# Path to a pre-existing Azure Resource Group

dependency "resource_group" {

config_path = "../../../resource_groups"

mock_outputs = {

ids = ["ids"],

locations = ["locations"],

names = ["name-1", "name-2"]

}

}

inputs = {

cluster_name = "vault-servers"

resource_group_name = dependency.resource_group.outputs.names[4]

virtual_network_id = dependency.network.outputs.vnet_id

subnet_id = dependency.network.outputs.vnet_subnets

admin_ssh_public_key = file("../rke.pub")

servers = 3

priority = "Regular"

tags = local.tags

vm_size = "Standard_F2"

enable_ccm = true

location = dependency.resource_group.outputs.locations[4]

resource_group_id = dependency.resource_group.outputs.ids[4]

upgrade_mode = "Manual"

rke2_version = "v1.20.7+rke2r1"

subnet_ids = dependency.network.outputs.vnet_subnets

}

Dependencies

This guide leverages terragrunt to create the required components for an end-state in which RKE2 is deployed on Azure Government. The components required are as follows:

- Azure VNET and subnets within that VNET

- Azure Resource Group, which will provide your location as well

- Azure NAT Gateway and NAT Gateway public IP

Inputs

To configure the deployment to your specific environment you will need to provide inputs to the variables defined in the RKE2 module (see variables.tf). Let's break down what these inputs are for.

- cluster_name: this is somewhat self-explanatory, but for the sake of being thorough, this input provides the name of your RKE2 cluster.

- resource_group_name: the Azure Resource Group in which your new cluster will reside.

- virtual_network_id: the id of the Azure VNET in which your resources will be deployed. In the example above, a VNET was created with a separate module and called into this terragrunt.hcl using the dependency block. The dependency provides outputs that can be passed into the inputs for the RKE2 module (or any other module that needs a VNET id).

- admin_ssh_public_key: in order to ssh into the nodes after you deploy RKE2, you need to pass the public key of an SSH keypair so that the ~/.ssh/authorized_keys file permits you to ssh once the VMs are running. As you can see in the example above, I have placed the public key I wish to use in the parent directory of this terragrunt.hcl so that the corresponding private key will grant me access to the underlying host OS, which is paramount to getting this up and running.

- servers: how many master nodes you wish to deploy via an Azure Scale Set. The process to get the master nodes online in Azure Government is semi-manual, so be mindful of your needs when setting the number of servers you wish to deploy.

- priority: set this to "Regular". Optionally you may set this to "Spot", but this is not recommended for production. Please read more on Azure's spot pricing.

- tags: this is pretty straightforward. This is a map of tags you would like to add to your instances. In this example, a locals block is defined at the top of this terragrunt.hcl and referenced as the value for the tags input.

- vm_size: please see Azure VM sizing to determine your computational needs and then set this value. Standard_F2 has been sufficient for a 3 master node, 5 worker node deployment of RKE2.

- enable_ccm: setting this to true ensures that your cluster has the permissions it needs to create a role for itself (masters and workers) so that it can write to the Azure Key Vault and read the cluster join token that is required to register all the nodes into a single cluster.

- location: the region where your VNET resides and where your RKE2 cluster will be deployed. For example, usgovvirginia is the region being assigned through the resource_group dependency.

- resource_group_id: exactly what the name says it is.

- upgrade_mode: this was a tricky one for me. "Automatic" is desired, however when set to automatic upgrade mode, there were errors caused by other values in the Terraform module itself. This is still being worked out and will be fixed.

- rke2_version: a string value that references a release from the RKE2 GitHub. I was unsuccessful with prior releases of RKE2 in my Azure environment but found success starting with v1.20.7 release 1 (v1.20.7+rke2r1). You can see all the releases here: RKE2 Releases

- subnet_ids: in my deployment, my subnets were created elsewhere, so I call the ids in via a dependency. Currently, only one subnet is being used to deploy all nodes. I am still working on making zone balancing and multiple subnets a feature for future deployments.

The Manual Stuff

Sometimes it's inevitable: when the people writing your automation are still working on said automation, you must improvise and lean on your experience and understanding to get things working. Hopefully this guide adds to that understanding and lessens the pain of your Azure Government journey. What follows is my story and my solutions for getting this Terraform module to produce RKE2 on Azure Government.

Azure Government is not Azure. So disabuse yourself of that notion and this process will be much easier for you as you will be gifting yourself an open mind moving forward.

For the sake of brevity I'll start with this:

In a git repository where we store all our infrastructure configurations, I created a workspace for myself to deploy RKE2. I had already created a VNET and a NAT Gateway (the terragrunt.hcl above shows you the relative paths to these dependencies). To get rolling you should do the same.

mkdir rke2-azure && cd rke2-azure

mkdir masters

mkdir agents

touch agents/terragrunt.hcl # this will need to be populated with your configurations

touch masters/terragrunt.hcl # as will this

# you have created a blank terragrunt file for your agent and masters to start your configuration that will leverage the RKE2 module

# ssh-keygen -t rsa -C "rke2"

# make sure to save the private key in a place that is not tracked by git...if you don't, that is on you. I recommend ~/.ssh/

cd masters

terragrunt plan

You will need to be authenticated for terragrunt to work. You can set environment variables or use the az command to log in. You can see how to do that in this documentation.
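If you go the environment variable route, a quick pre-flight check saves a confusing terragrunt failure. The sketch below assumes service principal authentication through the ARM_* variables that the azurerm provider reads; the function name missing_arm_vars is my own and not part of the module. If you prefer the az route, the interactive equivalent is noted in the comments.

```shell
#!/usr/bin/env bash
# Sketch only: assumes service principal auth via the azurerm provider's
# ARM_* environment variables. missing_arm_vars is a hypothetical helper.
#
# Interactive alternative with the az CLI:
#   az cloud set --name AzureUSGovernment
#   az login

# Print the names of the required variables that are unset or empty.
missing_arm_vars() {
  local name missing=()
  for name in ARM_CLIENT_ID ARM_CLIENT_SECRET ARM_SUBSCRIPTION_ID ARM_TENANT_ID; do
    # ${!name} is bash indirect expansion: the value of the variable named by $name
    [ -n "${!name:-}" ] || missing+=("$name")
  done
  echo "${missing[*]}"
}
```

Run missing_arm_vars before terragrunt plan; an empty result means all four variables are set.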

When you have deployed your masters, they will not come online. There is a bug in the leader election process that is still being investigated. Luckily for us all, everything that needs to be present on the nodes is already there, thanks to the legwork done in the Terraform module.

So what do you do about this? Let's start small. Let's get one master node online so that we have a leader that the other master nodes can join.

ssh rke2@$IP_OF_NODE, where $IP_OF_NODE is the address of one of the master nodes. This will be your leader. The module ships the template modules/custom_data/files/rke2_init.sh, and a rendered copy of it is present on the node you have just shelled into.

cd /var/lib/cloud/instances/$INSTANCE_ID/scripts

vim 01_rke2_init.sh

You should be editing this file:

#!/bin/bash

export TYPE="${type}"

export CCM="${ccm}"

# info logs the given argument at info log level.

info() {

echo "[INFO] " "$@"

}

# warn logs the given argument at warn log level.

warn() {

echo "[WARN] " "$@" >&2

}

# fatal logs the given argument at fatal log level.

fatal() {

echo "[ERROR] " "$@" >&2

exit 1

}

config() {

mkdir -p "/etc/rancher/rke2"

cat <<EOF > "/etc/rancher/rke2/config.yaml"

# Additional user defined configuration

${config}

EOF

}

append_config() {

echo $1 >> "/etc/rancher/rke2/config.yaml"

}

# The most simple "leader election" you've ever seen in your life

elect_leader() {

access_token=$(curl -s 'http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https%3A%2F%2Fmanagement.azure.com' -H Metadata:true | jq -r ".access_token")

read subscriptionId resourceGroupName virtualMachineScaleSetName < \

<(echo $(curl -s -H Metadata:true --noproxy "*" "http://169.254.169.254/metadata/instance?api-version=2020-09-01" | jq -r ".compute | .subscriptionId, .resourceGroupName, .vmScaleSetName"))

first=$(curl -s https://management.core.usgovcloudapi.net/subscriptions/$${subscriptionId}/resourceGroups/$${resourceGroupName}/providers/Microsoft.Compute/virtualMachineScaleSets/$${virtualMachineScaleSetName}/virtualMachines?api-version=2020-12-01 \

-H "Authorization: Bearer $${access_token}" | jq -ej "[.value[]] | sort_by(.instanceId | tonumber) | .[0].properties.osProfile.computerName")

if [[ $(hostname) = ${first} ]]; then

SERVER_TYPE="leader"

info "Electing as cluster leader"

else

info "Electing as joining server"

fi

}

identify() {

info "Identifying server type..."

# Default to server

SERVER_TYPE="server"

supervisor_status=$(curl --max-time 5.0 --write-out '%%{http_code}' -sk --output /dev/null https://${server_url}:9345/ping)

if [ $supervisor_status -ne 200 ]; then

info "API server unavailable, performing simple leader election"

elect_leader

else

info "API server available, identifying as server joining existing cluster"

fi

}

cp_wait() {

while true; do

supervisor_status=$(curl --write-out '%%{http_code}' -sk --output /dev/null https://${server_url}:9345/ping)

if [ $supervisor_status -eq 200 ]; then

info "Cluster is ready"

# Let things settle down for a bit, not required

# TODO: Remove this after some testing

sleep 10

break

fi

info "Waiting for cluster to be ready..."

sleep 10

done

}

fetch_token() {

info "Fetching rke2 join token..."

access_token=$(curl 'http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https%3A%2F%2Fvault.usgovcloudapi.net' -H Metadata:true | jq -r ".access_token")

token=$(curl '${vault_url}secrets/${token_secret}?api-version=2016-10-01' -H "Authorization: Bearer $${access_token}" | jq -r ".value")

echo "token: $${token}" >> "/etc/rancher/rke2/config.yaml"

}

upload() {

# Wait for kubeconfig to exist, then upload to keyvault

retries=10

while [ ! -f /etc/rancher/rke2/rke2.yaml ]; do

sleep 10

if [ "$retries" = 0 ]; then

fatal "Failed to create kubeconfig"

fi

((retries--))

done

access_token=$(curl 'http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https%3A%2F%2Fvault.usgovcloudapi.net' -H Metadata:true | jq -r ".access_token")

curl -v -X PUT \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $${access_token}" \

"${vault_url}secrets/kubeconfig?api-version=7.1" \

--data-binary @- << EOF

{

"value": "$(sed "s/127.0.0.1/${server_url}/g" /etc/rancher/rke2/rke2.yaml)"

}

EOF

}

pre_userdata() {

info "Beginning user defined pre userdata"

${pre_userdata}

info "Beginning user defined pre userdata"

}

post_userdata() {

info "Beginning user defined post userdata"

${post_userdata}

info "Ending user defined post userdata"

}

{

pre_userdata

config

fetch_token

# if [ $CCM = "true" ]; then

# append_config 'cloud-provider-name: "azure"'

# fi

if [ $TYPE = "server" ]; then

# Initialize server

identify

cat <<EOF >> "/etc/rancher/rke2/config.yaml"

tls-san:

- ${server_url}

EOF

if [ $SERVER_TYPE = "server" ]; then

append_config 'server: https://${server_url}:9345'

# Wait for cluster to exist, then init another server

cp_wait

fi

systemctl enable rke2-server

systemctl daemon-reload

systemctl start rke2-server

export KUBECONFIG=/etc/rancher/rke2/rke2.yaml

export PATH=$PATH:/var/lib/rancher/rke2/bin

# Upload kubeconfig to s3 bucket

upload

else

append_config 'server: https://${server_url}:9345'

# Default to agent

systemctl enable rke2-agent

systemctl daemon-reload

systemctl start rke2-agent

fi

post_userdata

}

At the moment the CCM variable appends cloud-provider-name: azure to the configuration in /etc/rancher/rke2/config.yaml, which passes an argument to the kubelet that will cause rke2 to panic and crash.

In order to stop this wretchedness, make sure that your script has this conditional check commented out:

if [ $CCM = "true" ]; then

append_config 'cloud-provider-name: "azure"'

fi

## Like this

# if [ $CCM = "true" ]; then

# append_config 'cloud-provider-name: "azure"'

# fi

Make sure this is not present in this script or in the config.yaml. In order to get the first node up, we need to hardcode SERVER_TYPE to leader in this script. We modify the first part of the script like so:

#!/bin/bash

export TYPE="${type}"

export CCM="${ccm}"

export SERVER_TYPE="leader"

Next, we must comment out a conditional that tries to determine the leader in a cluster like so:

# Original

if [[ $(hostname) = ${first} ]]; then

SERVER_TYPE="leader"

info "Electing as cluster leader"

else

info "Electing as joining server"

fi

# change to

# if [[ $(hostname) = ${first} ]]; then

# SERVER_TYPE="leader"

# info "Electing as cluster leader"

# else

# info "Electing as joining server"

# fi

Commenting this out will rid our script of any logical attempt to determine the leader (which in this case is what we want). For the first node we bring online, we need to force it to be the leader prior to joining our other masters. There is still work to do before we are able to run this script, however. Next, we need to remove the other assignments to SERVER_TYPE so that our global variable is not overwritten within one of the other functions.

identify() {

info "Identifying server type..."

# Default to server

SERVER_TYPE="server"

supervisor_status=$(curl --max-time 5.0 --write-out '%%{http_code}' -sk --output /dev/null https://${server_url}:9345/ping)

if [ $supervisor_status -ne 200 ]; then

info "API server unavailable, performing simple leader election"

elect_leader

else

info "API server available, identifying as server joining existing cluster"

fi

}

Now just comment out the SERVER_TYPE assignment:

identify() {

info "Identifying server type..."

# Default to server (commented out so the exported SERVER_TYPE is kept)

# SERVER_TYPE="server"

supervisor_status=$(curl --max-time 5.0 --write-out '%%{http_code}' -sk --output /dev/null https://${server_url}:9345/ping)

if [ $supervisor_status -ne 200 ]; then

info "API server unavailable, performing simple leader election"

elect_leader

else

info "API server available, identifying as server joining existing cluster"

fi

}

You can now run the edited script from the scripts directory with ./01_rke2_init.sh (use whatever name the file has on your node).
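If you would rather not make these edits by hand on every node, the same changes can be sketched with sed. This is only a sketch: force_server_type is a name I made up, it assumes GNU sed (which is what you will find on the Linux nodes), and the patterns assume the script looks like the one shown in this guide. It writes to a copy, so diff the result against the original before you run anything.

```shell
#!/usr/bin/env bash
# Sketch only: force_server_type is a hypothetical helper, not part of the module.
# Usage: force_server_type leader|server <original-script> <edited-copy>
force_server_type() {
  local role="$1" src="$2" dst="$3"
  sed \
    -e "/^export CCM=/a export SERVER_TYPE=\"${role}\"" \
    -e '/^[[:space:]]*if \[\[ \$(hostname) = /,/^[[:space:]]*fi[[:space:]]*$/ s/^/# /' \
    -e '/^[[:space:]]*if \[ \$CCM = "true" \]/,/^[[:space:]]*fi[[:space:]]*$/ s/^/# /' \
    -e 's/^\([[:space:]]*\)SERVER_TYPE="server"/\1# SERVER_TYPE="server"/' \
    "$src" > "$dst"
  # 1st expression: export the forced role right after the CCM export
  # 2nd expression: comment out the hostname comparison in elect_leader
  # 3rd expression: comment out the CCM block if it is still active
  # 4th expression: comment out the default assignment in identify
}
```

The start patterns are anchored to the beginning of the line, so a block that is already commented out is left alone rather than matched a second time.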

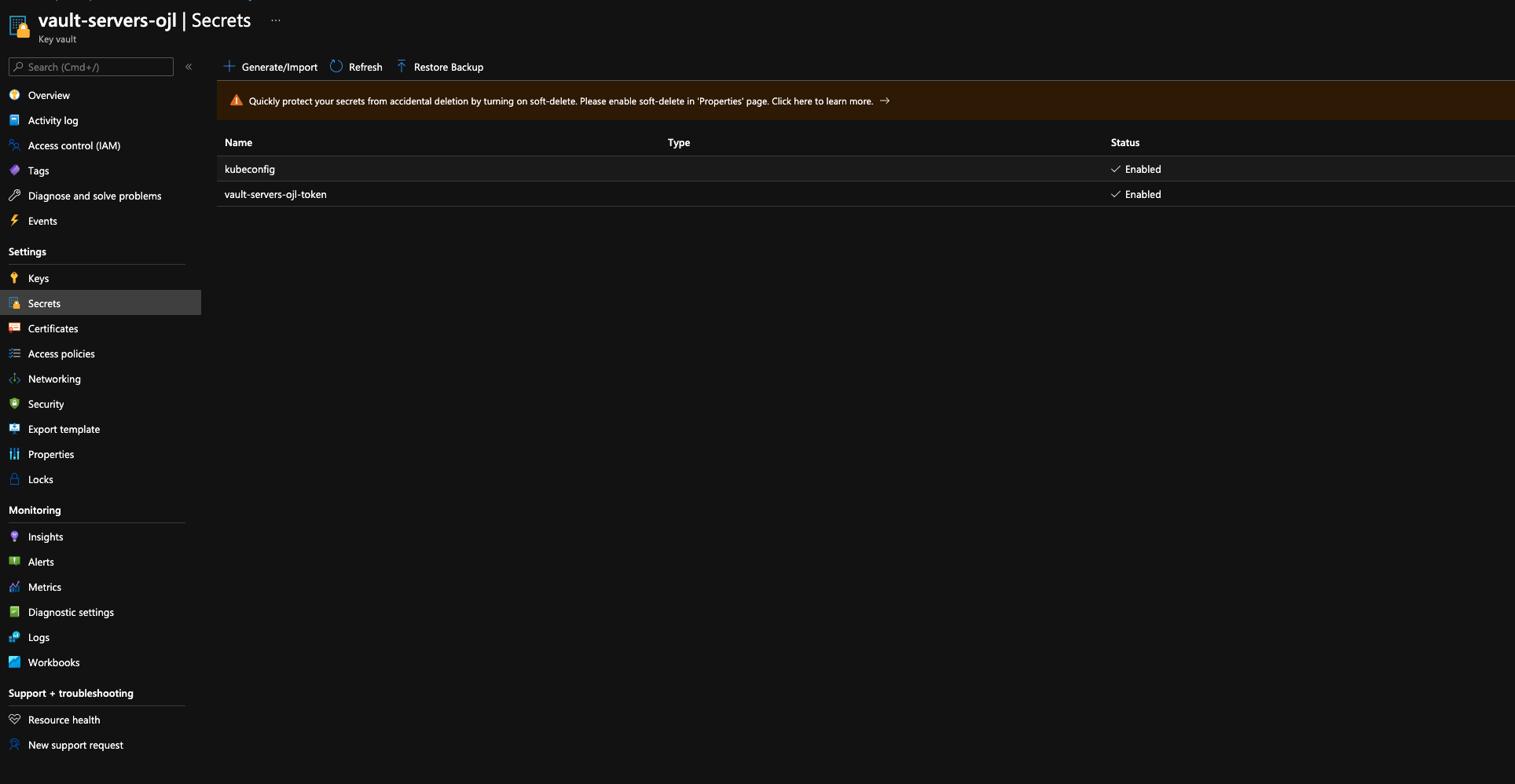

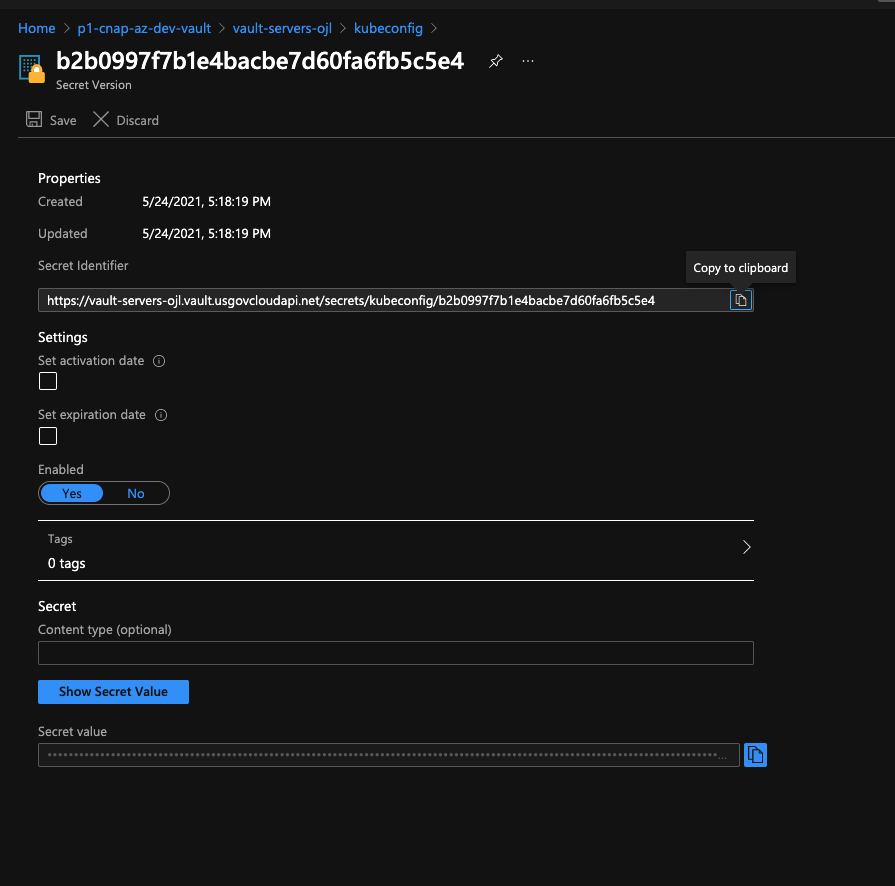

If this is successful, your kubeconfig will be written to the Azure Key Vault that was created when you ran terragrunt apply. You may need to manually add an access policy to allow your user account (your Azure Active Directory account) to read the data within Key Vault. Once you have access to read the secrets in Key Vault you will be able to obtain the cluster's kubeconfig.

You can simply click on the copy icon to the right of the "Secret value" field and paste the contents in a file within the ~/.kube directory or you can inspect the contents by clicking "Show Secret Value".
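If you prefer the command line to the portal, the same secret can be pulled with the az CLI. A minimal sketch, assuming you are already logged in to Azure Government and have read access to the vault; fetch_kubeconfig and the default output path are names I picked for illustration, and the vault name is whatever your deployment created. The secret name kubeconfig is the one the init script uploads.

```shell
#!/usr/bin/env bash
# Sketch only: fetch_kubeconfig is a hypothetical helper.
# Usage: fetch_kubeconfig <key-vault-name> [output-file]
fetch_kubeconfig() {
  local vault="$1" out="${2:-$HOME/.kube/rke2-azure.yaml}"
  mkdir -p "$(dirname "$out")"
  # The subshell keeps the restrictive umask from leaking into the caller;
  # the kubeconfig holds cluster credentials, so keep it private.
  (
    umask 077
    az keyvault secret show --name kubeconfig --vault-name "$vault" \
      --query value --output tsv > "$out"
  )
}
```

Afterwards, point KUBECONFIG at the file you wrote.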

In order to get RKE2 fully up and running, you will need to restart the rke2-server service. To do this run:

systemctl stop rke2-server

systemctl start rke2-server

# wait a few minutes then check that the control plane port is open and listening on 6443

netstat -putan
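Rather than eyeballing the netstat output, the wait can be scripted. The sketch below assumes ss from iproute2 is available on the node; port_is_listening and wait_for_apiserver are names I made up, and the retry count and delay are arbitrary defaults.

```shell
#!/usr/bin/env bash
# Sketch only: both helpers are hypothetical and not part of the module.

# Succeeds if the text on stdin (output of `ss -ltn` or `netstat -ltn`)
# shows a socket in LISTEN state on port $1.
port_is_listening() {
  local port="$1"
  grep -E 'LISTEN' | grep -Eq "[:.]${port}[[:space:]]"
}

# Poll until the Kubernetes API port is listening, or give up.
# Usage: wait_for_apiserver [tries] [delay-seconds]
wait_for_apiserver() {
  local tries="${1:-30}" delay="${2:-10}"
  while [ "$tries" -gt 0 ]; do
    if ss -ltn | port_is_listening 6443; then
      return 0
    fi
    tries=$((tries - 1))
    sleep "$delay"
  done
  return 1
}
```

A non-zero return from wait_for_apiserver is your cue to stop and start rke2-server again before touching the next node.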

IMPORTANT:

If rke2-server is not listening on 6443, check the config.yaml in the /etc/rancher/rke2 directory.

root@vm-p1cnapazurevaultb2zserver000000:/etc/rancher# cat rke2/config.yaml

# Additional user defined configuration

token: uisWPiDecp6aH8OkJDzw1OQCEEpBMb9GR9PETKY4

cloud-provider-name: "azure"

tls-san:

- 10.201.4.5

REMOVE the line cloud-provider-name: "azure" from this config file and try restarting the service again.
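The removal can also be done without opening an editor. A minimal sketch, assuming the config lives at the path shown above; strip_cloud_provider is a hypothetical helper name, and it keeps a .bak copy next to the file in case you need to roll back.

```shell
#!/usr/bin/env bash
# Sketch only: strip_cloud_provider is a hypothetical helper.
# Usage: strip_cloud_provider [config-file]
strip_cloud_provider() {
  local cfg="${1:-/etc/rancher/rke2/config.yaml}"
  # Keep a backup, then rewrite the file without the offending line.
  cp -p "$cfg" "${cfg}.bak"
  sed '/^[[:space:]]*cloud-provider-name:/d' "${cfg}.bak" > "$cfg"
}
```

After running it, stop and start rke2-server as described above.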

To bring up the remaining master nodes the process is similar to the one above. You will need to modify the same init script on each node and follow the same procedure as above, the only difference being the value of SERVER_TYPE. For the remaining nodes set this to the value of "server". The top of your script should look like this:

#!/bin/bash

export TYPE="${type}"

export CCM="${ccm}"

export SERVER_TYPE="server"

You can also leave SERVER_TYPE uncommented in the identify() function. You still will need to comment out the conditional in the elect_leader() function. Effectively we are rigging the election and telling our cluster which node is the leader and which are the joining servers.

IMPORTANT!!!: You need to ensure everything is actually up and running and that the host is listening on port 6443 before trying to join the next node. If netstat -putan does not show 6443 then you need to stop and then start the rke2-server service.

Deploying the Agents

Luckily, the agents are much friendlier than the master nodes. Recalling our directory structure, we should still be in the masters directory. Now change into the agents directory where your blank terragrunt.hcl resides.

cd ../agents

$EDITOR terragrunt.hcl

In the context of my infrastructure resources, I have this in my terragrunt.hcl file:

locals {

name = "rke2-vault-agents"

tags = {

"Environment" = local.name,

"Terraform" = "true",

}

}

# Terragrunt will copy the Terraform configurations specified by the source parameter, along with any files in the

# working directory, into a temporary folder, and execute your Terraform commands in that folder.

terraform {

source = "git::https://github.com/rancherfederal/rke2-azure-tf.git//modules/agents"

}

# Include all settings from the root terragrunt.hcl file

include {

path = find_in_parent_folders()

}

generate "provider" {

path = "provider.tf"

if_exists = "overwrite"

contents = <<EOF

provider "azurerm" {

version = "~>2.16.0"

environment = "usgovernment"

features{}

}

terraform {

backend "azurerm" {}

}

EOF

}

/* generate "resource" {

path = "resource.tf"

if_exists = "overwrite"

contents = <<EOF

resource "null_resource" "kubeconfig" {

depends_on = [module.rke2]

provisioner "local-exec" {

interpreter = ["bash", "-c"]

command = "az keyvault secret show --name kubeconfig --vault-name ${token_vault_name} | jq -r '.value' > rke2.yaml"

}

}

EOF

} */

dependency "servers" {

config_path = "../rke2"

mock_outputs = {

cluster_data = {

name = "cluster-name",

server_url = "derp",

cluster_identity_id = "something",

token = {

vault_url = "mock",

token_secret = ""

}

token_vault_name = "vault-something"

network_security_group_name = "soaspfas"

}

}

}

dependency "network" {

config_path = "../../../network/vault_vnet"

mock_outputs = {

vnet_id = "/some/long/stupid/id",

vnet_location = "location",

vnet_name = "crazy",

vnet_subnets = ["id_1", "id_2"],

vnet_address_space = ["10.0.0.0/12"]

}

}

dependency "resource_group" {

config_path = "../../../resource_groups"

mock_outputs = {

ids = ["ids"],

locations = ["locations"],

names = ["name-1", "name-2"]

}

}

inputs = {

name = "vault-agents"

resource_group_name = dependency.resource_group.outputs.names[4]

virtual_network_id = dependency.network.outputs.vnet_id

subnet_id = dependency.network.outputs.vnet_subnets

admin_ssh_public_key = file("../rke.pub")

instances = 5

priority = "Regular"

tags = local.tags

upgrade_mode = "Manual"

enable_ccm = true

spot = false

vm_size = "Standard_F2"

rke2_version = "v1.20.7+rke2r1"

os_disk_storage_account_type = "Standard_LRS"

cluster_data = dependency.servers.outputs.cluster_data

location = dependency.resource_group.outputs.locations[4]

token_vault_name = dependency.servers.outputs.token_vault_name

zone_balance = false

}

The most notable difference in this configuration from the master node configuration is the declaration of the token_vault_name and the added dependency of the master nodes module. The master nodes terraform module outputs the required data the agents need to successfully join the rest of the cluster which is passed through the input cluster_data.

However for the most part this configuration is nearly identical to that of the master nodes and should require very little additional configuration. Once you have saved your terragrunt.hcl you can then deploy:

terragrunt plan

# this will produce a lot of output and you should review it prior to applying

terragrunt apply

# make sure to type yes when prompted or you will sit there and nothing will happen

After you apply this your agents should start joining your cluster. A quick way to check the status and health of your cluster is to look at the instances within the scale set inside the Azure portal.

The health state is based on the control plane loadbalancer's communication with the supervisor port and the Kubernetes API port, so you will not see the state in your agents. If your masters are healthy you can check your workers with the kubeconfig and kubectl.

# Make sure you are in the right Kubernetes context

export KUBECONFIG=~/.kube/that_file_you_made_earlier

kubectl get nodes

The ROLES column will differentiate your masters from agents. All your nodes should be there and their STATUS should all read Ready.

ROLES AGE VERSION

<none> 50d v1.20.7+rke2r1

etcd,master 50d v1.20.7+rke2r1

<none> 50d v1.20.7+rke2r1

etcd,master 50d v1.20.7+rke2r1

<none> 50d v1.20.7+rke2r1
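With more than a handful of nodes it is easier to filter for the ones that are not Ready than to read the whole table. A small sketch, assuming the default kubectl get nodes column order (NAME, STATUS, ROLES, AGE, VERSION); not_ready_nodes is a name I made up.

```shell
#!/usr/bin/env bash
# Sketch only: not_ready_nodes is a hypothetical helper.
# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# NAME of every node whose STATUS column is anything other than "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is reported as well.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Example usage:
#   kubectl get nodes --no-headers | not_ready_nodes
```

No output means every node reported Ready.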

The manual process to bring master nodes online will be fixed as the Terraform module matures, but hopefully this account of successfully deploying RKE2 to Azure Government eases the pain of deployment and speeds up your own. I am certain there are variables I have not accounted for, so if you run into anything not covered within this guide, open a GitHub issue so that it can be tracked, documented, and fixed for all who rely on open source software and solutions to get shit done.